Monday, September 28, 2009

RA Free Time

Thursday, June 4, 2009

Seminar: Heterogeneous GPU Computing

The seminar covered the practical application of the GPU, a microprocessor optimized for common graphics applications, to parallel computing tasks. The GPU can be thought of as the CPU of any modern video card that a consumer can buy at the store.

A large part of the talk explained the differences between a CPU and a GPU. In both cases, the silicon is designed to process what are known as "atomic" statements. As the name implies, atomic statements are instructions that the processor executes natively, without needing to break them down into more basic instructions. Atomic statements are therefore the "basic" instructions.

A general-purpose CPU like the Intel Core i7 is designed so that it can, with a little extra work, compute just about any problem that a piece of software or hardware requires. A GPU, on the other hand, such as the NVidia GTX 295, is designed to work quickly on mathematical problems like matrix math. This is because the graphics displayed on a monitor, whether the Windows desktop or a rendered scene from a video game, are the result of numerous mathematical calculations on geometric objects. So instead of the problem being broken down into basic parts on the Core i7 (which adds considerable computational overhead), it can be processed as is on the GTX 295.

For parallel computing tasks, such a math-oriented environment is ideal, since many parallel computing problems require the hardware to spend enormous amounts of time crunching floating-point numbers. Adding to the computational power is the number of cores on a modern GPU; the current NVidia Tesla GPU contains 240 cores dedicated to processing. Naturally, the Tesla GPU outperforms the Core i7 almost threefold in computationally intensive tasks.

Of course, the disadvantage of the GPU implementation is its utterly abysmal performance on general-purpose computation; the CPU is designed with the general case in mind, after all. An additional point that wasn't touched upon in the seminar is a common misconception about a GPU's performance compared to a CPU's. It seems that the amazing threefold advantage can only be achieved on problems termed "embarrassingly" parallel, that is, problems that are (laughably) easy to break down into independent parallel components.

I guess number-crunching fits that bill quite well.
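To make "embarrassingly parallel" concrete, here is a minimal Python sketch (not from the seminar) of a problem with that shape: scaling every element of a list. Each output depends only on its own input, so the data can be cut into chunks that never need to talk to each other. The names `scale_chunk` and `parallel_scale` are hypothetical, and Python threads stand in for GPU cores here only to show the structure; in CPython they will not actually speed up CPU-bound work.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_chunk(chunk, factor):
    # Every output element depends only on its own input element,
    # so chunks can be processed independently, in any order.
    return [x * factor for x in chunk]


def parallel_scale(data, factor, workers=4):
    # Split the input into roughly equal, independent chunks (ceiling division).
    size = max(1, -(-len(data) // workers))
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = pool.map(scale_chunk, chunks, [factor] * len(chunks))
        # pool.map yields results in input order, so reassembly is just concatenation.
        return [x for part in parts for x in part]
```

By contrast, a running total, where each output needs the previous one, does not split this cleanly, which is why it is not "embarrassingly" parallel.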

Monday, June 1, 2009

Longer Lasting Digital Memory

In an era when a sizable portion of the world's literature is stored in some digital form, the relatively short shelf life of such media leaves much to be desired. The irony is that while efforts to "archive" printed works from centuries past were intended to preserve them, the printed originals will more than likely outlast the digital collections they have been copied into. This is due to theoretical limitations of using semiconductors for such tasks.

Researchers have discovered an alternative to the traditional semiconductor approach using modern techniques from nanotechnology. Digital information is usually stored as a machine-readable set of 1's and 0's. By placing an iron nanoparticle inside a carbon nanotube, where it can rest in one of two positional states, one can make the iron move between the states by applying an electric current. The two positions effectively represent the machine-readable 1's and 0's required by the system.
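As a rough illustration of the idea (a toy model, not the researchers' device), the Python sketch below treats each nanotube as a cell whose particle sits at one of two ends, with the direction of an applied current choosing the end. The class `NanotubeCell`, the ±1 current convention, and the assumption that reading does not disturb the particle are all hypothetical simplifications.

```python
class NanotubeCell:
    """Toy model (hypothetical): an iron nanoparticle resting at either end of a nanotube."""

    def __init__(self):
        self.position = 0  # 0 = particle at one end, 1 = particle at the other end

    def apply_current(self, direction):
        # Assumption: current in the +1 direction pushes the particle to end 1,
        # current in the -1 direction pushes it back to end 0.
        if direction not in (-1, 1):
            raise ValueError("direction must be -1 or +1")
        self.position = 1 if direction == 1 else 0

    def write(self, bit):
        self.apply_current(1 if bit else -1)

    def read(self):
        # Assumption: sensing the position does not move the particle.
        return self.position


def store_byte(value):
    # One cell per bit, most significant bit first.
    cells = [NanotubeCell() for _ in range(8)]
    for i, cell in enumerate(cells):
        cell.write((value >> (7 - i)) & 1)
    return cells


def load_byte(cells):
    result = 0
    for cell in cells:
        result = (result << 1) | cell.read()
    return result
```

For example, `store_byte(5)` leaves the eight particles in the positions 0, 0, 0, 0, 0, 1, 0, 1.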

Greater storage capacity can be achieved by packing the components of a digital medium into dense clusters. This relationship is directly proportional: the greater the density of the medium in a given physical space, the greater the storage offered. Unfortunately, semiconductor density also has an inverse relationship with shelf life: the denser the medium, the shorter its shelf life. The nanotube system is believed to be relatively stable in this regard, as nanotubes can be packed as densely as needed while keeping the same shelf life: over a billion years.

Nanoscale Reversible Mass Transport for Archival Memory [Nano Letters, ACS Publishing]

Sunday, May 31, 2009

A planet like ours?

Although a planet like Earth has been discovered, it doesn’t seem like we will be getting there any time soon. With our current technology, traveling 41 light-years would take far more than a couple hundred years; even our fastest probes would need hundreds of thousands of years. It’s pretty amazing that we’re able to observe something that far away in the first place. Despite this, three programs are being put in place to help achieve this goal one day. It’s amazing how far we’ve come in the exploration of space. It’s also interesting that Marcy has fully planned out what to do if we humans ever interact with extraterrestrial beings. It really is something special to be able to live through the making of history.
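The travel-time arithmetic is simple enough to check directly. This minimal Python sketch divides distance by speed, assuming constant speed with no acceleration phase and taking about 17 km/s, roughly Voyager 1's speed as it leaves the solar system, as a stand-in for "current technology". The function name `travel_years` is hypothetical.

```python
KM_PER_LIGHT_YEAR = 9.4607e12          # kilometres in one light-year
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year
SPEED_OF_LIGHT_KM_S = 299_792.458


def travel_years(distance_ly, speed_km_s):
    """Years needed to cover distance_ly at a constant speed (no acceleration or braking)."""
    return distance_ly * KM_PER_LIGHT_YEAR / speed_km_s / SECONDS_PER_YEAR


# Assumption: ~17 km/s, roughly Voyager 1's speed.
print(round(travel_years(41, 17)))  # about 723,000 years

# Speed that would make the trip take about 200 years: roughly 20% of light speed.
print(travel_years(41, 0.205 * SPEED_OF_LIGHT_KM_S))
```

In other words, a trip of a couple hundred years would require a craft moving at about a fifth of the speed of light, thousands of times faster than anything launched so far.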

Sunday, May 24, 2009

Cell Phone Viruses

http://www.sciencedaily.com/releases/2009/05/090521161531.htm

Nationwide Academic Experiment

In an attempt to clean up America’s education system, the US government is going to choose a small group of states to participate in an intensive training program backed by a 5 billion dollar budget. I agree with the nation’s top education official, who proposed a nationwide educational program. America is one country, not 50 individual ones, so why do we have 50 separate education systems, all of which differ slightly? However, California’s chances are not looking too great overall. Although many superintendents are pushing for California’s enrollment in the program, budget cuts that lead to fewer programs and instructional hours make it unlikely that California will be able to help its lower-performing students succeed.

I find it very ironic that states which need financial help to boost performance are required to show proof of their success, which is not evident precisely because of the lack of funding in the first place. This is exactly the scenario California is in right now. California desperately needs the extra funding to get back on its feet, but most likely won’t receive it because of that same lack of funding. It’s unthinkable that Governor Schwarzenegger is proposing to shorten the school year by a week. A week doesn’t seem like a lot, but for a student just entering high school, by the time s/he graduates, that adds up to one full month of lost instruction. In my opinion, the government should split the budget according to each state’s population and distribute the funds to all of them.

Source: http://www.sfgate.com/cgi-bin/article.cgi?f=/c/a/2009/05/22/BAR617PKQK.DTL

Google for Music

Electrical engineers at UC San Diego are working on a music identification system. What adds a new twist to the formula is their genre-based approach to music selection. For example, when a user searches for "easy listening", the system will attempt to identify every song in its database that it determines to be "easy listening" and return the results to the user as a recommended list. The key point, of course, is that the software (and to some extent, hardware) will be able to determine the genre by analyzing the digital information that represents the music.

Apparently, the system had trouble with Queen's Bohemian Rhapsody. I can't blame it.

The system needs initial parameters in order to determine which types of music fit into which genres. The researchers originally paid students to listen to songs and label them manually, but switched to a new model in which members of the social-networking site Facebook play a series of games that accomplish the same objective. Named Herd-It, the game has users listen to music, identify instruments, and finally label the songs in order to earn points on a high-score table. The closer a player's submission matches the normative answers among all players of the game, the higher the score.
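Here is a minimal Python sketch of how agreement-based scoring and the resulting labels could fit together. It is an illustration under stated assumptions, not Herd-It's actual rules: the consensus label for a song is taken to be its most common player label, a player earns points in proportion to the share of players who gave the same answer (up to a hypothetical `max_points` of 100 per song), and a genre search simply returns the songs whose consensus label matches. All function names are hypothetical.

```python
from collections import Counter


def consensus_labels(votes):
    # votes: {song: [label, label, ...]} -> {song: most common label}
    # Ties go to the label encountered first.
    return {song: Counter(labels).most_common(1)[0][0]
            for song, labels in votes.items() if labels}


def score_player(answers, votes, max_points=100):
    # answers: {song: label} from one player. Each answer earns points in
    # proportion to the share of all players who chose the same label.
    total = 0.0
    for song, label in answers.items():
        labels = votes.get(song, [])
        if labels:
            total += max_points * labels.count(label) / len(labels)
    return total


def search_by_label(consensus, label):
    # Genre search over the crowd-sourced labels.
    return sorted(song for song, tag in consensus.items() if tag == label)


votes = {
    "song_a": ["easy listening", "easy listening", "rock"],
    "song_b": ["rock", "rock"],
}
print(search_by_label(consensus_labels(votes), "easy listening"))  # ['song_a']
```

Scoring by agreement rewards players for giving the answer most people would give, which is exactly what makes the labels useful as training data.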

The researchers are also saving money. Hiding a research effort in the guise of a game allows them to utilize a human computer farm at the cost of a few hours of programming.

From A Queen Song To A Better Music Search Engine [Science Daily]

Sunday, May 17, 2009

Fire Ants

Fire ants are a pesky type of ant that stings to kill rather than just biting like ordinary ants. Their venom produces an effect similar to a wasp sting. Within the past few years, the fire ant population in the United States has increased exponentially. Many researchers say that the new ants are coming specifically from Mexico and bring an even more venomous sting.

Unlike most ants, fire ants are widely regarded as a non-beneficial species, so there have been ongoing efforts to control their population. One promising option is a special parasitic fly, the phorid fly. These flies can easily kill fire ants by injecting eggs into them. The larva eats away at the fire ant’s brain and then severs the head by releasing an acid that eats away at the neck. The larva stays inside, using the hollowed-out head as shelter, and emerges after about 40 days. Although this process sounds great, it is extremely slow given how many fire ants already exist. One of the more interesting details is that the larva, once inside the ant’s head, is actually able to control the fire ant. This is one of the few real-world examples of zombies.

A group of professors released two separate batches of these phorid flies in the United States last year in hopes of helping control the fire ant population. If the flies fail, scientists are currently working on alternative methods of killing off the fire ants, such as fungi and viruses.

Source: http://news.nationalgeographic.com/news/2009/05/090515-zombie-ants-flies.html

Saturday, May 16, 2009

Life's Rocky Road: The History of Life on Earth

Monday, May 11, 2009

Do Biofuels Really Help Save the Environment?

Sunday, May 10, 2009

UCB Hacked

One of the servers at the University of California, Berkeley (UCB) has been compromised by hackers. The intrusion began on October 6, 2008, but was not discovered until April 9 of this year, roughly six months later. It’s amazing that the hackers were able to maintain control of the servers of such a large educational institution for so long. Forensic experts traced the attack back to China.

The hackers were discovered when a system maintenance worker found a message they had left behind. Apparently, it is common for hackers to leave hidden messages telling victims that they have been hacked, like a predator toying with its prey. I don’t really understand why they would give away the intrusion, since the hackers could probably have kept control of the server if they had left no traces behind.

It appears that similar incidents have happened before in which information was stolen from UCB, but those cases were usually resolved before anything too serious happened. This time, it seems the thieves got away with 97,000 Social Security numbers belonging to staff, faculty, and students. It is also interesting to note that the email warning students and staff of the security breach was not sent until two days after the hacking was discovered. Something as serious as identity theft should be reported to the victims immediately.

Source: http://tech.yahoo.com/news/ap/20090508/ap_on_hi_te/us_tec_uc_data_theft

USB 3.0

The new USB standard, published at the end of last year and expected to first hit markets at the end of 2009 or early 2010, is a significant improvement over the previous and current version, 2.0. USB 3.0, dubbed “Super-Speed” (as opposed to the previous “High-Speed”), has several important features that should help it be adopted by the market. Probably one of the most important is backwards compatibility: all current 2.0 devices are usable with the new system. This is accomplished by building two subsystems, one for 3.0 and one for 2.0. The two systems are pin compatible, so they share the same USB port. Any 2.0 device will be usable with 3.0, and vice versa.

Probably the most notable difference with the new standard is the speed: 5 Gbit/s! Compared to the previous 480 Mbit/s, that is roughly a tenfold increase. The increase is accomplished by adding four more wires to the cable. This will make 3.0 cables substantially larger than the previous 2.0 version, enough so that the different cables can be told apart visually.
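To put the two signaling rates side by side, here is a minimal Python sketch that converts a file size into a best-case transfer time. It uses the raw rates quoted above and deliberately ignores line-encoding and protocol overhead (USB 3.0's 8b/10b encoding alone leaves at most 4 Gbit/s for data), so real transfers will be slower. The constant and function names are hypothetical.

```python
USB2_HIGH_SPEED_BPS = 480e6   # 480 Mbit/s
USB3_SUPER_SPEED_BPS = 5e9    # 5 Gbit/s


def transfer_seconds(size_bytes, rate_bps):
    # Best case: raw signaling rate only, no encoding or protocol overhead.
    return size_bytes * 8 / rate_bps


dvd_image = 4.7e9  # bytes, a single-layer DVD image
print(transfer_seconds(dvd_image, USB2_HIGH_SPEED_BPS))   # roughly 78 seconds
print(transfer_seconds(dvd_image, USB3_SUPER_SPEED_BPS))  # roughly 7.5 seconds
print(USB3_SUPER_SPEED_BPS / USB2_HIGH_SPEED_BPS)         # about 10.4
```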

Another notable change is in the power delivery capabilities of the system. In the prior version, 2.0, devices were guaranteed up to 500 mA, with more negotiable. The threshold has now been raised to 900 mA, with more negotiable. The increase recognizes that more and more embedded systems use USB to charge batteries.
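The practical effect of the higher current limit follows from P = V × I on the nominal 5 V USB bus: 500 mA is 2.5 W, while 900 mA is 4.5 W. The minimal Python sketch below computes that and an idealized charging time for a hypothetical 1800 mAh battery. It ignores charger losses and the slower taper near full charge, so real charging takes longer. The function names are hypothetical.

```python
USB_BUS_VOLTAGE = 5.0  # nominal VBUS, volts


def bus_power_watts(current_ma, volts=USB_BUS_VOLTAGE):
    # P = V * I, with the current converted from milliamps to amps.
    return volts * current_ma / 1000.0


def charge_hours(capacity_mah, current_ma):
    # Idealized: constant current, no losses, no taper near full charge.
    return capacity_mah / current_ma


print(bus_power_watts(500), bus_power_watts(900))    # 2.5 4.5
print(charge_hours(1800, 500), charge_hours(1800, 900))  # 3.6 2.0
```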

Source: “USB 3.0 SuperSpeed” by José Luis Rupérez Fombellida, published in the May 2009 issue of Elektor.

Cody

Sunday, May 3, 2009

Invisibility Cloaks

Proofreading CPS

Cyber-physical systems (CPS) encompass all instances of interaction between a physical object and the computational system embedded in it. A specific example of a CPS is the interaction between an airplane and its collision detection system.

As with any piece of computer software, a design flaw should ideally be found and fixed while the system is still in the design stage. If the flaws carry over into manufacturing, the only way to find them is trial and error. In the case of an airplane collision detection system, such trials would be expensive, time-consuming, and ultimately impractical.

A research team at Carnegie Mellon has developed software that, given some initial parameters, attempts to find a counterexample illustrating a flaw in a given design. For example, an airplane on a collision course with another airplane will receive evasion instructions from its collision detection system in order to avoid an almost certainly fatal crash. The software, after receiving the parameters of the collision detection system, will attempt to find a scenario in which the two planes still crash into each other.

Employing typical "brute-force" methods on this problem would be a computational nightmare, if not outright impossible, because the real world presents infinitely many combinations of variables that can affect the outcome of the system. The software sidesteps this difficulty by deriving "differential invariants": properties of the system that remain true as it evolves and that can be checked from the system's derivatives without solving its equations of motion. Using the differential invariants of the collision problem, the software will attempt to piece together a counterexample, or to prove that the design is sound because no such counterexample can exist.

The latter is obviously the difficult part.
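As a small illustration of what a differential invariant is (not the CMU software itself), consider a hypothetical aircraft turning at a constant angular velocity ω: its position (x1, x2) moves along a direction vector (d1, d2), and that vector rotates, so d1' = -ω·d2 and d2' = ω·d1. The candidate invariant I = d1² + d2² (squared speed) is invariant if its rate of change along the flow, the gradient of I dotted with the vector field, is always zero. Here that is 2·d1·(-ω·d2) + 2·d2·(ω·d1) = 0. The Python sketch below only spot-checks this identity at random exact rational points; an actual verifier proves it symbolically, and a full collision-avoidance proof needs further invariants about the separation between the planes.

```python
import random
from fractions import Fraction


def vector_field(state, omega):
    # Hypothetical turning aircraft: position follows the direction vector,
    # and the direction vector rotates at angular velocity omega.
    x1, x2, d1, d2 = state
    return (d1, d2, -omega * d2, omega * d1)


def speed_sq_gradient(state):
    # Gradient of the candidate invariant I = d1^2 + d2^2 with respect to (x1, x2, d1, d2).
    _, _, d1, d2 = state
    return (0, 0, 2 * d1, 2 * d2)


def lie_derivative(gradient, field):
    # Rate of change of I along the flow: grad(I) . f
    return sum(g * f for g, f in zip(gradient, field))


def spot_check(trials=1000, seed=0):
    # Exact Fractions avoid floating-point noise; this samples points, it does not prove.
    rng = random.Random(seed)
    for _ in range(trials):
        state = tuple(Fraction(rng.randint(-100, 100), rng.randint(1, 20)) for _ in range(4))
        omega = Fraction(rng.randint(-10, 10), rng.randint(1, 5))
        if lie_derivative(speed_sq_gradient(state), vector_field(state, omega)) != 0:
            return False
    return True
```

A quantity that is not invariant, such as x1 alone, fails the same test, because its derivative along the flow is d1.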

Method For Verifying Safety Of Computer-controlled Devices Developed [Science Daily]

Magazine Rundown

IEEE Spectrum: This magazine is the IEEE’s general-interest magazine. It lacks the depth of the more specialized journals, but it generally has interesting articles of the tech-forum variety.

Scientific American: This magazine is a bastion among the science magazines that I read. It’s a little too pop-sci to be truly authoritative, but it has intermediate-level articles that, with some dedication, are well worth reading.

Make Magazine: This magazine (really almost a softcover book) is a hobbyist technology magazine. It’s almost what Popular Mechanics was back in the day, before it was corrupted by bad articles. Make has many unique and interesting projects that, while I’ll never build most of them, inspire me to do something of my own. Add in the culture that they nurture, and it’s an enjoyable read. Think Portland.

US Pollution

Although there have been plans to reduce and prevent pollution, there are limitations that towns do not have control over. It’s also known that cleaning up the air and adding regulations is very expensive. Even though corporations like Apple and Google are adopting green policies, cities around America will continue to pollute.

Monday, April 27, 2009

The Hephaestian

Sunday, April 26, 2009

Online Privacy

Due to a creative interpretation of German copyright law, various record labels are now able to retrieve personal information from internet service providers and take legal action. One of the most prominent demonstrations of this occurred last week, when the popular website Rapidshare was forced to hand over logs of its uploaders’ IP addresses. Ranked 14th on Alexa’s list of top sites, Rapidshare is a dedicated one-click file hosting service. Already, a man has been apprehended for uploading Metallica’s new album Death Magnetic to Rapidshare a day before its scheduled release date.

However, this does not apply only to music labels. The movie industry and various other rights holders have also started taking advantage of this new interpretation of the law in Germany. This can extend not only to services like Rapidshare but also to the BitTorrent community in Germany. Especially after the verdict in The Pirate Bay trial, BitTorrent has been a hot topic lately. Numerous BitTorrent communities have already closed, most of them voluntarily, for fear of being prosecuted.

Ironically, Lars Ulrich of Metallica admitted to downloading one of his own albums from BitTorrent. A former anti-piracy advocate, Ulrich now acknowledges the changing times of the internet. Having tried piracy for the first time, he described the experience as bizarre.

In our current age of internet technology, privacy online is just as important as in real life. Not having full control of where our personal information goes is a serious issue that is bound to grow larger as the internet progresses. Hopefully, the German law will be revised and fixed up to protect online users.

Sources:

http://www.alexa.com/topsites

http://torrentfreak.com/rapidshare-shares-uploader-info-with-rights-holders-090425/

http://torrentfreak.com/metallica-frontman-pirates-his-own-album-090305/

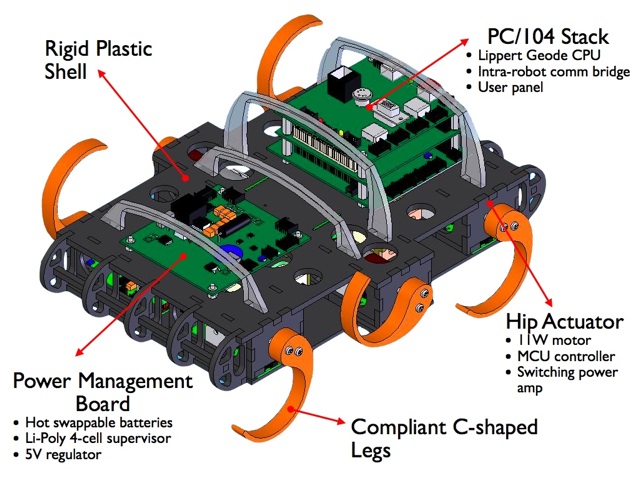

Sandbots

Sand is a difficult place for a robot to maneuver, and it requires a different kind of design from the traditional ones. Consider the three most common forms of locomotion today: wheels, tracks, and legs. Each of these fails in a sand environment. Tracks and wheels dig into the sand and spin freely; legs sink deep into it, making movement prohibitively expensive. The authors present an alternative method of moving across sand: a crescent-shaped ‘leg’ that spins. With six per vehicle, the legs propel the robot by pushing it along in a tripod-style walking pattern, with two legs on one side and one on the other moving together. The authors tested their vehicle in a unique setup that simulates sand without the mess: they filled a tank with poppy seeds and placed air nozzles at the bottom so they could agitate the seeds to exactly the density required.
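The tripod pattern can be sketched as two groups of three rotating legs turning half a revolution out of phase, so one group is always pushing while the other swings around. In the minimal Python sketch below, the leg labels (L1 to L3 on the left, R1 to R3 on the right, front to rear), the one-turn-per-period timing, and the rule that a tripod pushes during the first half of its turn are hypothetical simplifications, not details from the article.

```python
import math

# Each tripod has two legs on one side and one on the other.
TRIPOD_A = ("L1", "R2", "L3")  # front-left, middle-right, rear-left
TRIPOD_B = ("R1", "L2", "R3")  # front-right, middle-left, rear-right


def leg_angles(t, period=1.0):
    # Every leg makes one full turn per period; tripod B lags tripod A by half a turn.
    base = 2 * math.pi * (t % period) / period
    angles = {leg: base for leg in TRIPOD_A}
    angles.update({leg: (base + math.pi) % (2 * math.pi) for leg in TRIPOD_B})
    return angles


def stance_tripod(t, period=1.0):
    # Hypothetical rule: a tripod pushes against the ground during the first half of its turn.
    return TRIPOD_A if (t % period) < period / 2 else TRIPOD_B
```

Because three legs in a triangle are always planted, the robot stays statically stable while the other three legs come around.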

The interesting thing about this project is that the authors are designing their robot using the bionic principle. They’ve figured out that there is a problem of robots failing in sand, and have looked to nature for an example. The crab was a particular inspiration, as well as the zebra-tailed lizard.

One thing that I would have liked to see addressed in the article is what a robot that is either a sled design or a mono-wheel design would do in such an environment. Humans have used sleds to travel overland for thousands of years, so that might be a good starting point for another robotic design. Perhaps a pair of sleds where one pushes the other forward, and then they switch roles?

Error and Quantum Sensors

Continuing along the quantum computing tour path, researchers have been able to harness a glaring shortfall of quantum computing methodology and morph it into something practical. The premise is so simple that you would have to wonder why the research didn't take place much sooner.

As with conventional computers, quantum computers are vulnerable to random noise as they process data. The popular design manipulates the quantum states of individual atoms to represent data in a meaningful and ultimately useful way. Unfortunately, for a quantum computer, random noise can come from anything, from the heat of the sun to the movement of electrons in the air around the machine.

An immediately practical application of such sensitivity is to use it as the core of an extremely tiny, atom-sized sensor. Such "quantum sensors" would be able to detect natural phenomena several orders of magnitude smaller than what is currently considered "undetectable". The article mentions, as an example, tiny magnetic waves emanating from the ocean floor that may indicate untapped oil reserves.

The Oxford researchers named their system the "quantum cat", after Schrödinger's thought experiment involving a box, a cat and a vial full of lethal poison. Perhaps the most interesting (and ironic) part of the story is the paradigm shift required in the manufacture of quantum computers.

"Many researchers try to make quantum states that are robust against their environment," said team member Dr Simon Benjamin of Oxford University’s Department of Materials, "but we went the other way and deliberately created the most fragile states possible."

Quantum Cat’s 'Whiskers' Offer Advanced Sensors [Science Daily]

Puijila darwini

Sunday, April 19, 2009

Cybernetic Security

Most people, however, agree that the security of our digital information is one of the most important issues we face today. Greg Nojeim of the Center for Democracy and Technology stated that the bill is extremely vague and would greatly broaden the government’s powers, while others argued that the American public must have its private information protected. In our day and age, our digital selves are just as important as our real ones. It was pointed out that certain networks, such as those holding people’s electric, banking, health, and traffic records, are vulnerable to attack. Such attacks, if carried out, would have a large effect on the American public as a whole. Not only would private information be destroyed or leaked, but trust in the American government would be badly damaged. Despite his objections, Nojeim acknowledges the bill’s advantages but strongly urges Congress to modify it.

USC Researchers Develop 3D Display

Friday, April 17, 2009

Schizophrenia and Disconnectivity in the Brain